Different Types of Cloud Storage: Choosing the Right Solution

(This article applies to all hyperscalers.)

We are living in the age of knowledge-driven economics. Information is an asset, and how we share it defines the extent of our success. We have made major advances in the way we share and exchange information; however, the real game changer throughout this shift has been the emergence of cloud technology. Cloud computing, popularly referred to as the cloud, has redefined the way we store and share our information. The companies that make such services available are known as Cloud Service Providers, hyperscalers, Cloud Providers, or simply Providers. The leaders in this space include AWS, GCP, and Azure. Cloud technologies have helped us move beyond the limitations of using a physical device to share information and have opened a whole new dimension of the internet. We shall shortly see the why and how of this.

(This whitepaper focuses on AWS, but other providers such as GCP and Azure offer similar services.)

So how do we use these digital resources stored in virtual space? By way of networks, which allow people to share information and applications without being restricted by their physical location. We can say that Cloud Computing is the ‘on-demand delivery of IT services and resources over the Internet with a pay-as-you-go pricing model’. Instead of buying, owning, and maintaining physical data centers and servers, you can access technology services, such as computing power, storage, and databases, on an as-needed basis from a cloud provider.

Organizations of every type, size, and industry are using the cloud for a wide variety of use cases, such as data backup, disaster recovery, email, virtual desktops, software development, big data analytics, and customer-facing web applications.

Spending on Cloud Services has been increasing year over year, which means that in the coming days we will see more and more clients coming to us to move their workloads from on-premises onto the Cloud.

So while spending on Cloud keeps compounding, spending on on-premises infrastructure remains relatively flat. All predictions about Cloud Computing spending point in the same direction, even if the details differ slightly. Why has this happened? Let us look at the benefits the Cloud provides.

Cost – Cloud computing eliminates the need to buy physical storage hardware and servers, which reduces an organization's Capital Expenditure. It also eliminates lengthy procurement and big upfront costs, so these funds can be directed towards innovation or research and development. With cloud, organizations pay only for the resources that they actually consume, which avoids wasting bandwidth and resources. The ability to spin up new services without the time and effort associated with traditional IT procurement should make it easier to get going with new applications faster. That means reduced time to market and increased productivity for the organization. For a company with an application that has big peaks in usage, such as one that is only used at a particular time of the week or year, it might make financial sense to host it in the cloud rather than have dedicated hardware and software lying idle for much of the time. One caution regarding cost: cloud computing is not necessarily cheaper than other forms of computing, just as renting is not always cheaper than buying in the long term.

Scalability – With cloud computing, you don’t have to over-provision resources up front to handle peak levels of business activity in the future. Instead, you provision the amount of resources that you actually need. You can scale these resources up or down to instantly grow and shrink capacity as your business needs change. Elastic scaling gives the customer the right amount of resources (e.g., storage, processing power, bandwidth) only when they’re needed, thereby reducing costs.

Setup time – The ability to spin up new services without the time and effort associated with traditional IT procurement should mean that it is easier to get going with new applications faster.

Space – Organizations do not need to host servers in their own facilities, which saves costly infrastructure space, since all the servers are hosted on the Cloud Provider's premises.

Support – Cloud Providers offer numerous subscription plans, each with a different degree of customer support, to help customers troubleshoot the issues their servers face.

Monitoring – Cloud Providers also provide integrated monitoring tools, such as Amazon CloudWatch and AWS CloudTrail, which continuously log events and help diagnose issues.

Security – Providers deploy numerous security measures to keep the resources they host secure.

Self Service – The Cloud platform is self-service, which makes it easy for users to provision the resources they require.

Disaster Recovery – Cloud Providers design their infrastructure to be highly reliable and resilient to many types of disasters. Disaster Recovery plans allow end users to specify the fallback actions, backups, etc. in case of failures.

Auto-updates – Cloud Providers take responsibility for automatically updating their servers, which helps maintain a high degree of security.

Connectivity – The Global Edge Network and other networks help ensure that you can set up your resources where you need them and handle failures.

Collaboration – Multi-cloud configurations are supported to enable a high degree of security, etc.

Now let us look at the infrastructure that AWS maintains across the globe.

Amazon cloud computing resources are hosted in multiple locations worldwide. These locations are composed of AWS Regions, Availability Zones, Local Zones, etc.

An AWS Region is a separate geographic area designed to be isolated from the other AWS Regions. This design achieves the greatest possible fault tolerance and stability. When you view your resources, you see only the resources that are tied to the AWS Region that you specified. This is because AWS Regions are isolated from each other, and AWS does not automatically replicate resources across Regions.

Availability Zones (or AZs) are multiple, isolated locations within each AWS Region, which allow you to place resources (such as compute and storage) in more than one location. When you launch an instance, you select a Region and a Virtual Private Cloud (or VPC), and then you can either select a subnet from one of the AZs or let AWS choose one for you. If you distribute your instances across multiple AZs and one instance fails, you can design your application so that an instance in another AZ can handle requests. You can also use Elastic IP addresses to mask the failure of an instance in one AZ by rapidly remapping the address to an instance in another AZ.
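
The idea of distributing instances across AZs so that another AZ can take over can be sketched as follows. This is a minimal illustration only, not an AWS API: the instance names and the helper functions `spread_across_azs` and `healthy_targets` are hypothetical, and a real deployment would typically rely on a load balancer or Auto Scaling group to do this.

```python
from itertools import cycle


def spread_across_azs(instance_ids, azs):
    """Assign instances to AZs round-robin so no single AZ holds all of them."""
    return {instance_id: az for instance_id, az in zip(instance_ids, cycle(azs))}


def healthy_targets(placement, failed_azs):
    """Return the instances that can still serve requests when some AZs fail."""
    return sorted(i for i, az in placement.items() if az not in failed_azs)


# Hypothetical instance names; AZ names follow the usual "<region><letter>" form.
placement = spread_across_azs(
    ["web-1", "web-2", "web-3", "web-4"],
    ["us-east-1a", "us-east-1b"],
)
print(placement)
print(healthy_targets(placement, failed_azs={"us-east-1a"}))
```

With two AZs, losing one still leaves half of the instances available to handle requests, which is the property the paragraph above describes.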

A Local Zone is an extension of an AWS Region that is geographically close to your users. You can extend any VPC from the parent AWS Region into Local Zones. To do so, create a new subnet and assign it to the AWS Local Zone. When you create a subnet in a Local Zone, your VPC is extended to that Local Zone. The subnet in the Local Zone operates the same as other subnets in your VPC.

Additionally AWS has Outposts and Wavelength Zones. AWS Outposts brings native AWS services, infrastructure, and operating models to virtually any data center, co-location space, or on-premises facility. Wavelength Zones allow developers to build applications that deliver ultra-low latencies to 5G devices and end users. Wavelength deploys standard AWS compute and storage services to the edge of telecommunication carriers' 5G networks.

That was an introduction to Regions and AZs. Additionally, AWS has the concept of a ‘Global Edge Network’, which provides reliable, low-latency, high-throughput network connectivity.

To deliver content to end users with lower latency, AWS uses a global network of 600+ Points of Presence and 13 Regional Edge Caches in 100+ cities across 50 countries. AWS Edge Locations are marked in this diagram.

AWS peers with thousands of Tier 1/2/3 telecom carriers globally and is well connected with all major access networks for optimal performance. It has hundreds of terabits of deployed capacity. AWS Edge Locations are connected to the AWS Regions through the AWS network backbone: fully redundant, multiple 400GbE parallel fiber that circles the globe and links with tens of thousands of networks for improved origin fetches and dynamic content acceleration, also known as Transfer Acceleration.

As shown in this diagram, one VPC belongs to one Region and one subnet belongs to one AZ.
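
The containment rules (a VPC lives in one Region, each subnet lives in one AZ of that Region) can be modeled in a few lines. This is a toy model using only the Python standard library, not the AWS API; the `Vpc` and `Subnet` classes, the CIDR ranges, and the check that an AZ name starts with the Region name are all assumptions made for illustration.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Subnet:
    cidr: ipaddress.IPv4Network
    az: str  # exactly one AZ per subnet


@dataclass
class Vpc:
    region: str  # exactly one Region per VPC
    cidr: ipaddress.IPv4Network
    subnets: list = field(default_factory=list)

    def add_subnet(self, cidr, az):
        network = ipaddress.ip_network(cidr)
        # Simplifying assumption: AZ names begin with the Region name.
        if not az.startswith(self.region):
            raise ValueError(f"{az} is not in Region {self.region}")
        if not network.subnet_of(self.cidr):
            raise ValueError(f"{network} is outside the VPC range {self.cidr}")
        if any(network.overlaps(s.cidr) for s in self.subnets):
            raise ValueError(f"{network} overlaps an existing subnet")
        subnet = Subnet(network, az)
        self.subnets.append(subnet)
        return subnet


vpc = Vpc("us-east-1", ipaddress.ip_network("10.0.0.0/16"))
vpc.add_subnet("10.0.1.0/24", "us-east-1a")
vpc.add_subnet("10.0.2.0/24", "us-east-1b")
for s in vpc.subnets:
    print(s.cidr, "->", s.az)
```

Trying to add a subnet in an AZ of a different Region, or with an address range outside the VPC, raises an error in this model.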

And this is roughly what a typical enterprise application will look like after migrating to the Cloud.

More and more organizations, enterprises, and small and mid-sized businesses are trusting their data storage to the Cloud. It enables greater cost savings, reliability, and predictability. A Cloud-First strategy leads to cost benefits for the following reasons:

- Reduced Capital Expenditure on setup cost

- Reduced Operation Expenditure

- Cloud Providers operate at a massive scale, benefiting from economies of scale that smaller organizations cannot achieve. They invest in energy-efficient infrastructure, and it is by sharing of these resources among multiple customers, that they achieve the economies of scale, resulting in energy and cost savings, reduced license fees, global network of data centers, auto-updates and patches. They then can pass on cost savings to customers.

- Effective pricing model allowing pay-as-you-go and pay-per-use

- Effortless scaling as per demand

- Cloud providers handle software updates and security patches, reducing the workload on any separate IT teams. This also ensures that systems are always up to date and secure.

- Cloud Providers provide security services in a Shared Responsibility model. Enabling the security features to secure your data can lead to reduced costs expended on data breaches.

- Cloud services enable rapid deployment of IT resources. This agility can lead to faster time-to-market for new products and services, potentially increasing revenue.

- Replication, disaster recovery, and backup of data and servers are easy to set up. Having your data replicated across your Cloud Service Provider's network of servers gives your data greater resilience in case of emergency or system failure. And less downtime means money saved.

Free Tier – It allows you to get a free hands-on experience with AWS products and services.

On-Demand - Pay-as-you-go pricing is simple, with no upfront fees. On-Demand Instances let you pay for compute capacity by the hour or second (minimum of 60 seconds). You are billed from the time an instance is launched until it is terminated. All data transfer in is free. Data transfer out from Amazon EC2 to the internet is charged. AWS customers receive 100 GB of data transfer out to the internet free each month, aggregated across all AWS services and Regions. As data transfer volume increases, the price falls from $0.09 per GB for the first 10 TB per month to $0.05 per GB for more than 150 TB per month. Data transfer out from Amazon EC2 to other AWS services is cheaper still, at $0.02 per GB.
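
The billing rules above can be turned into a rough calculator. This is a sketch under stated assumptions, not a quote: the hourly rate passed to `on_demand_cost` is hypothetical, 1 TB is taken as 1,024 GB, and only the first ($0.09) and last ($0.05) data transfer prices come from the text; the two middle tiers are assumed values included to make the tiering logic concrete.

```python
def billable_seconds(runtime_seconds):
    """Per-second billing with the 60-second minimum."""
    return max(60, runtime_seconds)


def on_demand_cost(runtime_seconds, hourly_rate):
    """Cost of one instance run; hourly_rate is a hypothetical price in USD."""
    return billable_seconds(runtime_seconds) * hourly_rate / 3600


# (tier size in GB, price per GB in USD)
TIERS = [
    (10 * 1024, 0.09),      # first 10 TB per month (from the text)
    (40 * 1024, 0.085),     # next 40 TB: ASSUMED for illustration
    (100 * 1024, 0.07),     # next 100 TB: ASSUMED for illustration
    (float("inf"), 0.05),   # beyond 150 TB per month (from the text)
]
FREE_GB = 100  # free data transfer out per month


def transfer_out_cost(gb, tiers=TIERS, free_gb=FREE_GB):
    """Monthly cost of data transfer out to the internet under tiered pricing."""
    remaining = max(0.0, gb - free_gb)
    cost = 0.0
    for size, price in tiers:
        used = min(remaining, size)
        cost += used * price
        remaining -= used
        if remaining <= 0:
            break
    return cost


print(billable_seconds(10))               # a 10-second run is billed as 60 seconds
print(round(transfer_out_cost(1100), 2))  # 1,000 billable GB in the first tier
```

Actual prices vary by Region and change over time, so the tier table should be replaced with current figures before using such a calculation for planning.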

Spot Instance - With Spot Instances, you can use Amazon EC2 at discounts of up to 90% off On-Demand pricing. Spot Instances are used for various fault-tolerant and flexible applications, such as stateless web servers, big data and analytics applications, containerized workloads, and other flexible workloads. Spot Instances perform exactly like other EC2 instances while running. However, they can be interrupted by Amazon EC2 when EC2 needs the capacity back. When EC2 interrupts your Spot Instance, it either terminates, stops, or hibernates the instance, depending on the interruption behavior that you choose. If EC2 interrupts your Spot Instance in the first hour, before a full hour of running time, you're not charged for the partial hour used. However, if you stop or terminate your Spot Instance yourself, you pay for any partial hour used (as you do for On-Demand or Reserved Instances).

Reserved Instance - Amazon EC2 Reserved Instances (RI) provide a significant discount (up to 72%) compared to On-Demand pricing and provide a capacity reservation when used in a specific AZ. When purchasing a Reserved Instance, you need to specify the term length along with the instance type, platform, payment option, and offering class.

Savings Plan - Savings Plans is a flexible pricing model that can help you reduce your bill by up to 72% compared to On-Demand prices, in exchange for a one- or three-year spend commitment. AWS offers three types of Savings Plans: Compute Savings Plans, EC2 Instance Savings Plans, and Amazon SageMaker Savings Plans. Compute Savings Plans apply to usage across Amazon EC2, AWS Lambda, and AWS Fargate. The EC2 Instance Savings Plans apply to EC2 usage, and SageMaker Savings Plans apply to SageMaker usage. Once you sign up for a Savings Plan, your compute usage will automatically be charged at the discounted Savings Plans prices and any usage beyond your commitment will only be charged at regular On Demand rates.
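
How a commitment interacts with usage can be sketched with a simplified model. The assumptions are significant: a single flat discount is applied to all usage (real Savings Plans discounts vary by instance type and Region), the commitment is expressed per hour, and the function name `hourly_bill` and the figures in the example are hypothetical.

```python
def hourly_bill(on_demand_usage, commitment, discount):
    """
    Estimate one hour's bill under a Savings Plan (simplified model).

    on_demand_usage: what the hour's usage would cost at On-Demand rates (USD)
    commitment:      hourly spend commitment (USD), paid whether or not it is used
    discount:        flat discount as a fraction, e.g. 0.5 for 50% (assumed)
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    # On-Demand-equivalent usage the commitment absorbs at discounted rates.
    covered = commitment / (1 - discount)
    # Anything beyond that is charged at regular On-Demand rates.
    overflow = max(0.0, on_demand_usage - covered)
    return commitment + overflow


# $5/hour commitment at an assumed 50% discount covers $10 of On-Demand usage.
print(hourly_bill(on_demand_usage=12.0, commitment=5.0, discount=0.5))  # 7.0
print(hourly_bill(on_demand_usage=4.0, commitment=5.0, discount=0.5))   # 5.0
```

The second call shows the trade-off of committing: when usage falls below what the commitment covers, the commitment is still paid in full.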

The AWS Pricing Calculator is an estimation tool that provides an approximate cost of using AWS services based on the usage parameters that you specify in the calculator. It is not a quote tool, and does not guarantee the cost for your actual use of AWS services. It provides only an estimate of your AWS fees and doesn't include any taxes that might apply. Your actual fees depend on a variety of factors, including your actual usage of AWS services.

There are three main types of Cloud Computing service models, namely Software as a Service (SaaS), Platform as a Service (PaaS), and Infrastructure as a Service (IaaS).

SaaS – SaaS provides you with a complete product that is run and managed by the service provider. A well-known example is Gmail.

PaaS – PaaS removes the need for you to manage underlying infrastructure (usually hardware and operating systems), and allows you to focus on the deployment and management of your applications. This helps you be more efficient, as you don’t need to worry about resource procurement, capacity planning, etc. PaaS includes everything that is needed to build and run an application, such as a web server, database, and development tools. Well-known PaaS offerings include Heroku, AWS Elastic Beanstalk, and Google App Engine.

IaaS – IaaS contains the basic building blocks of cloud computing. It lets you rent networking, computers (virtual hardware), and data storage space. IaaS gives you the highest level of flexibility and control over your IT resources.

"X as a service" (rendered as *aaS in acronyms) is a phrasal template for any business model in which a product is offered to the customer as a subscription-based service rather than as an artifact owned and maintained by the customer. Originating from the Software As A Service concept that appeared in the 2010s with the advent of Cloud Computing, the template has expanded to numerous offerings in the field of information technology and beyond it. The term XaaS can mean "Anything as a Service". It means some feature is being delivered or served to an organization through a remote connection from a third-party provider, as opposed to a feature being managed on site and by in-house personnel alone.

Examples are – DBaaS (Database as a Service), FaaS (Function as a Service), NaaS (Network as a Service), IDaaS (Identity as a Service), etc.

Compute Services – EC2, Lambda, Elastic Beanstalk. Amazon Elastic Compute Cloud (Amazon EC2) provides on-demand, scalable computing capacity in the Amazon Web Services (AWS) Cloud. AWS Lambda allows you to run code without provisioning or managing servers. You pay only for the compute time that you consume; there's no charge when your code isn't running. As a user, your responsibility is just to upload the code, and Lambda handles the rest. AWS Elastic Beanstalk is a service for deploying and scaling web applications developed using Java, PHP, Python, Docker, etc. You just need to upload your code, and Elastic Beanstalk handles the deployment: capacity provisioning, load balancing, auto scaling, and application health monitoring are all managed internally. It suits developers well, since it takes care of the servers, load balancers, and firewalls.
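
To make the Lambda model concrete, here is a minimal sketch of the kind of code you would upload. A Python Lambda function is an ordinary function that receives an event and a context object; the name `lambda_handler` is a common convention (the actual handler name is configurable), and the event field `name` and the response shape used here are assumptions chosen for illustration.

```python
import json


def lambda_handler(event, context):
    """Entry point the service would invoke; 'event' carries the request data."""
    name = event.get("name", "world")  # hypothetical input field
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local smoke test: call the handler directly, with no servers involved.
print(lambda_handler({"name": "cloud"}, None))
```

Because the handler is a plain function, it can be exercised locally like this before it is uploaded.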

Database Services – RDS, DynamoDB. Amazon RDS (Relational Database Service) is a managed relational database service for engines such as PostgreSQL, MariaDB, MySQL, and Oracle. There’s no need to install and manage the database software. DynamoDB is a serverless NoSQL database that supports key-value and document data models and is designed to run high-performance applications. It can handle up to 10 trillion requests per day and support peaks of more than 20 million requests per second. DynamoDB is a fully managed, multi-master, multi-Region, durable database with built-in security and in-memory caching for web-scale applications.

Storage – S3, EBS, EFS. Amazon S3 is an object storage service. It makes it easy to store data and access it from anywhere on the web. It has robust access controls, replication, and versioning controls. Amazon EBS is a block storage solution specifically designed for Amazon EC2. It can handle diverse workloads, and you can choose between several volume types to balance performance and cost. Amazon EFS (Elastic File System) is a simple, serverless file system that you can create and configure without provisioning, deploying, patching, or maintaining infrastructure. It is a scalable NFS file system made for use with AWS cloud services and on-premises resources. It can scale up to petabytes.

Networking – VPC, Route 53, CloudFront. Amazon VPC enables you to set up a logically isolated virtual network, with its own range of IP addresses, where you can deploy AWS resources. Using VPC, you have full control over the environment, such as choosing the IP address range, creating subnets, and arranging route tables. Amazon Route 53 is a highly available and scalable Domain Name System (DNS) web service. You can use Route 53 to perform three main functions in any combination: domain registration, DNS routing, and health checking. Amazon CloudFront is a web service that speeds up distribution of your static and dynamic web content, such as .html, .css, .js, and image files, to your end users. CloudFront delivers your content through a worldwide network of data centers called Edge Locations.

Security – WAF, AWS Shield, ACM. AWS WAF is a Web Application Firewall that lets you monitor the HTTP requests that are forwarded to your protected web application resources. It lets you control access to your content. Based on criteria that you specify, such as the IP addresses that requests originate from or the values of query strings, the service associated with your protected resource responds to requests either with the requested content, with an HTTP 403 status code (Forbidden), or with a custom response. Amazon CloudFront, AWS Shield, AWS Web Application Firewall (WAF), and Amazon Route 53 work seamlessly together to create a flexible, layered security perimeter against multiple types of attacks including network and application layer DDoS attacks.
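
The kind of decision a web application firewall makes (allow the request, or answer with HTTP 403) can be illustrated with a toy evaluator. This is not the AWS WAF API or its rule language; the rule tables `BLOCKED_NETWORKS` and `BLOCKED_QUERY_VALUES`, the function `evaluate_request`, and the sample addresses (taken from documentation-only IP ranges) are all hypothetical.

```python
import ipaddress
from urllib.parse import parse_qs, urlsplit

# Hypothetical rules: block one source network and one query-string value.
BLOCKED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
BLOCKED_QUERY_VALUES = {"debug": {"true"}}


def evaluate_request(source_ip, url):
    """Return the HTTP status a firewall-like filter would produce."""
    ip = ipaddress.ip_address(source_ip)
    if any(ip in network for network in BLOCKED_NETWORKS):
        return 403  # Forbidden: request originates from a blocked range
    query = parse_qs(urlsplit(url).query)
    for param, banned_values in BLOCKED_QUERY_VALUES.items():
        if banned_values.intersection(query.get(param, [])):
            return 403  # Forbidden: query string matches a blocked value
    return 200  # request is passed through to the protected resource


print(evaluate_request("203.0.113.7", "https://example.com/"))
print(evaluate_request("198.51.100.1", "https://example.com/?debug=true"))
print(evaluate_request("198.51.100.1", "https://example.com/?page=2"))
```

The three calls exercise the two criteria mentioned above, source IP address and query string value, plus the pass-through case.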

There are four main ways to access AWS services, namely the AWS Management Console, the AWS CLI, AWS CloudShell, and the AWS SDKs.

The AWS Management Console is a web-based interface that allows users to interact with AWS services through a graphical user interface (GUI). It's designed for users who are new to AWS and provides an easy-to-use interface for managing AWS resources.

The AWS CLI allows you to access the same AWS resources, but from the command line on your machine, be it Windows, Mac, or Linux. Most of the everyday tasks that can be done with the Console can also be done with the CLI.

The AWS Software Development Kits (SDKs) are libraries that enable developers to interact with AWS services from their applications. The SDKs are available in several programming languages, including Java, Python, .NET, and JavaScript. They enable us to develop and deploy applications on AWS.
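
As a small illustration of SDK access, the sketch below lists S3 bucket names with the AWS SDK for Python (boto3). It assumes boto3 is installed and credentials are configured when run against a real account; so that the example can run without either, the client is injectable and a stand-in `FakeS3Client` with hypothetical bucket names is used for the demonstration.

```python
def list_bucket_names(s3_client=None):
    """Return the names of the S3 buckets visible to the caller."""
    if s3_client is None:
        # Imported lazily so the demo below runs without the SDK installed.
        import boto3  # third-party package: the AWS SDK for Python

        s3_client = boto3.client("s3")
    response = s3_client.list_buckets()
    return [bucket["Name"] for bucket in response["Buckets"]]


class FakeS3Client:
    """Stand-in that mimics the shape of the list_buckets response."""

    def list_buckets(self):
        return {"Buckets": [{"Name": "example-bucket-a"}, {"Name": "example-bucket-b"}]}


print(list_bucket_names(FakeS3Client()))
```

Calling `list_bucket_names()` with no argument would use a real boto3 client instead of the stand-in.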

S3 - Amazon S3 is an object storage service. It makes it easy to store data and access it from anywhere on the web. It has robust access controls, replication, and versioning controls.

EBS - Amazon EBS is a block storage solution specifically designed for Amazon EC2. It can handle diverse workloads, and you can choose between several volume types to balance performance and cost.

Elastic File System – Amazon EFS (Elastic File System) is a simple, serverless file system that you can create and configure without provisioning, deploying, patching, or maintaining infrastructure. It is a scalable NFS file system made for use with AWS cloud services and on-premises resources. It can scale up to petabytes.

AWS Backup – AWS Backup is a fully managed service that enables you to configure backup policies and monitor activity for your AWS resources in one place. It allows you to automate and consolidate backup tasks.

FSx – Amazon FSx enables us to launch, run, and scale feature-rich, high-performance file systems in the cloud. You can choose between four widely used file systems: Lustre, NetApp ONTAP, OpenZFS, and Windows File Server.

Amazon S3 Glacier – With Amazon S3 Glacier (S3 Glacier) you can create vaults and archives. A vault is a container for storing archives, and an archive is any object, such as a photo, video, or document, that you store in a vault. It is different from the S3 storage classes that carry the Glacier name.

AWS Storage Gateway – AWS Storage Gateway is a service that connects an on-premises software appliance with cloud-based storage to provide seamless and secure integration between your on-premises IT environment and the AWS storage infrastructure in the AWS Cloud.

Amazon S3 provides features so that you can optimize, organize, and configure access to your data to meet your specific business requirements. You can store any number of objects in a bucket and, by default, can have up to 100 buckets in your account. To request an increase, you can visit the Service Quotas console. Every object contained in a bucket is addressable by its corresponding URL. When you create a bucket, you enter a bucket name and choose the AWS Region where the bucket will reside. After you create a bucket, you cannot change its name or its Region. Objects are the fundamental entities stored in Amazon S3. Objects consist of object data and metadata. The metadata is a set of name-value pairs that describe the object. These pairs include some default metadata, such as the date last modified, and standard HTTP metadata, such as Content-Type. You can also specify custom metadata at the time that the object is stored. An object is uniquely identified within a bucket by a key (name) and a version ID (if S3 Versioning is enabled on the bucket).
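
The addressing and identity rules above can be sketched with an in-memory toy. It is not the S3 API: `VersionedBucket` is a hypothetical class, random hex strings stand in for S3's opaque version IDs, and `object_url` builds a virtual-hosted-style URL (whether such a URL is actually readable depends on the bucket's access settings).

```python
import uuid
from urllib.parse import quote


def object_url(bucket, region, key):
    """Build a virtual-hosted-style URL for an object key."""
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"


class VersionedBucket:
    """Toy bucket where an object is identified by (key, version ID)."""

    def __init__(self, name, region):
        self.name = name      # fixed after creation
        self.region = region  # fixed after creation
        self._objects = {}    # (key, version_id) -> (data, metadata)
        self._latest = {}     # key -> most recent version_id

    def put(self, key, data, metadata=None):
        version_id = uuid.uuid4().hex  # stand-in for an opaque version ID
        self._objects[(key, version_id)] = (data, dict(metadata or {}))
        self._latest[key] = version_id
        return version_id

    def get(self, key, version_id=None):
        version_id = version_id or self._latest[key]
        return self._objects[(key, version_id)]


bucket = VersionedBucket("example-bucket", "us-east-1")
v1 = bucket.put("notes/a.txt", b"one", {"Content-Type": "text/plain"})
v2 = bucket.put("notes/a.txt", b"two", {"Content-Type": "text/plain"})
print(bucket.get("notes/a.txt")[0])      # latest version
print(bucket.get("notes/a.txt", v1)[0])  # an earlier version, by version ID
print(object_url(bucket.name, bucket.region, "reports/2024 q1.csv"))
```

Writing the same key twice does not overwrite the first object in this model; both versions remain retrievable, which mirrors the behavior described when S3 Versioning is enabled.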

S3 Standard – The default storage class. If you don't specify the storage class when you upload an object, AWS assigns the S3 Standard storage class.

Amazon S3 Express One Zone - A high-performance, single-zone storage class that is purpose-built to deliver consistent, single-digit millisecond data access for your most latency-sensitive applications. S3 Express One Zone is the lowest-latency cloud object storage class available today, with data access speeds up to 10x faster and request costs 50 percent lower than S3 Standard. S3 Express One Zone is the first S3 storage class where you can select a single AZ, with the option to co-locate your object storage with your compute resources, which provides the highest possible access speed.

S3 Intelligent-Tiering - You can store data with changing or unknown access patterns in S3 Intelligent-Tiering, which optimizes storage costs by automatically moving your data between access tiers when your access patterns change. It offers low-latency access tiers for frequently and infrequently accessed data, and two opt-in archive access tiers designed for asynchronous access to rarely accessed data. S3 Intelligent-Tiering automatically stores objects in three access tiers:

Frequent Access – Objects that are uploaded or transitioned to S3 Intelligent-Tiering are automatically stored in the Frequent Access tier.

Infrequent Access – S3 Intelligent-Tiering moves objects that have not been accessed in 30 consecutive days to the Infrequent Access tier.

Archive Instant Access – With S3 Intelligent-Tiering, any existing objects that have not been accessed for 90 consecutive days are automatically moved to the Archive Instant Access tier.
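
The tiering thresholds above can be expressed as a small decision function. This is only a sketch of the rule as stated in the text (30 and 90 consecutive days without access); the function name `access_tier` is hypothetical, and the opt-in archive tiers are left out.

```python
def access_tier(days_since_last_access):
    """Return the automatic access tier for an object, per the thresholds above."""
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access must be non-negative")
    if days_since_last_access >= 90:
        return "Archive Instant Access"
    if days_since_last_access >= 30:
        return "Infrequent Access"
    return "Frequent Access"


for days in (0, 29, 30, 89, 90):
    print(days, "->", access_tier(days))
```

In this model, an object that is accessed again would have its day count reset to zero and so return to the Frequent Access tier.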

S3 Standard-IA and S3 One Zone-IA storage classes are designed for long-lived and infrequently accessed data. (IA stands for infrequent access.)

S3 Glacier Instant Retrieval, S3 Glacier Flexible Retrieval, and S3 Glacier Deep Archive storage classes are designed for low-cost data archiving.

Below are the applications suited for Amazon S3 Express One Zone.

Amazon Elastic Block Store (Amazon EBS) provides block level storage volumes for use with EC2 instances.

You create an EBS volume in a specific AZ, and then attach it to an instance in that same AZ. To make a volume available outside of the AZ, you can create a snapshot and restore that snapshot to a new volume anywhere in that Region. You can also copy snapshots to other Regions and then restore them to new volumes there, making it easier to leverage multiple AWS Regions for geographical expansion, data center migration, and disaster recovery. EBS provides the following volume types: General Purpose SSD, Provisioned IOPS SSD, Throughput Optimized HDD, and Cold HDD. EBS volumes persist independently from the running life of an EC2 instance. You can attach multiple EBS volumes to a single instance. EBS can be thought of as analogous to a pen drive.
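
The placement rules for volumes and snapshots can be captured in a toy model. None of this is the EC2 API: the `Volume` and `Snapshot` classes, the helper functions, and the volume IDs are hypothetical, and `region_of` assumes standard AZ names made of the Region name plus one letter (for example "us-east-1a").

```python
from dataclasses import dataclass


def region_of(az):
    """Assumes AZ names of the form '<region><letter>', e.g. 'us-east-1a'."""
    return az[:-1]


@dataclass(frozen=True)
class Volume:
    volume_id: str
    az: str  # a volume lives in exactly one AZ


@dataclass(frozen=True)
class Snapshot:
    source_volume_id: str
    region: str  # a snapshot is usable anywhere in its Region


def attach(volume, instance_az):
    """A volume can only be attached to an instance in the same AZ."""
    if volume.az != instance_az:
        raise ValueError(f"{volume.volume_id} is in {volume.az}, not {instance_az}")
    return f"{volume.volume_id} attached in {instance_az}"


def snapshot(volume):
    return Snapshot(volume.volume_id, region_of(volume.az))


def copy_snapshot(snap, target_region):
    """Copying a snapshot makes it available in another Region."""
    return Snapshot(snap.source_volume_id, target_region)


def restore(snap, new_volume_id, target_az):
    """A snapshot can be restored to any AZ within the snapshot's Region."""
    if region_of(target_az) != snap.region:
        raise ValueError(f"snapshot is in {snap.region}; copy it to the target Region first")
    return Volume(new_volume_id, target_az)


vol = Volume("vol-a", "us-east-1a")
snap = snapshot(vol)
print(restore(snap, "vol-b", "us-east-1b"))                             # same Region, other AZ
print(restore(copy_snapshot(snap, "eu-west-1"), "vol-c", "eu-west-1a"))  # other Region
```

Attaching `vol` to an instance in "us-east-1b" raises an error in this model, which is why the snapshot route is needed to move data between AZs or Regions.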

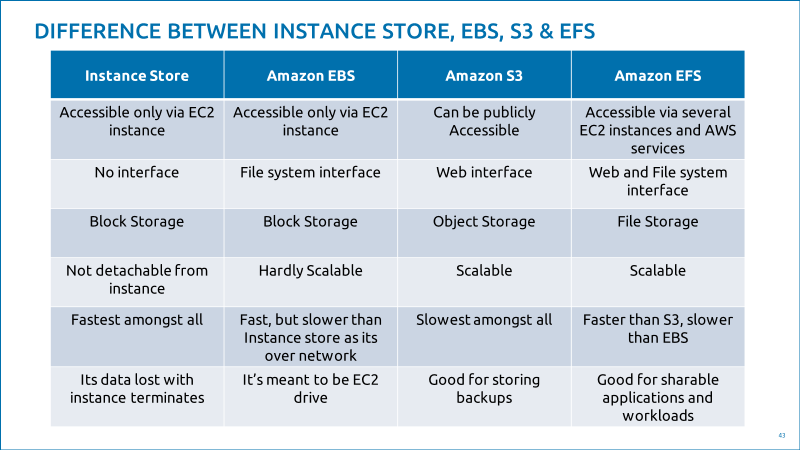

Lastly, below is a comparison table: